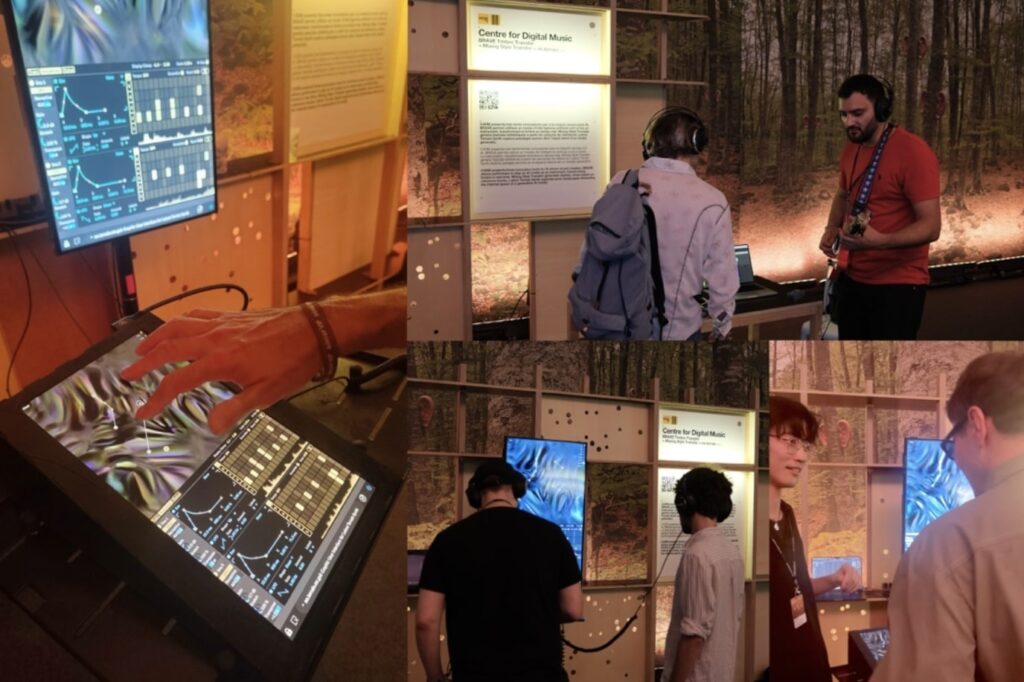

AIM at Sónar+D 2025

AIM at Sónar+D 2025 Project Area. Photos taken by Anna Xambó and Shuoyang Zheng

Sónar is a pioneering festival that has reflected the evolution and expansion of electronic music and digital culture since its first edition in 1994. The Project Area at Sónar+D is an interactive exhibition space that showcases state-of-the-art technology, innovative design, radical thinking, and cutting-edge research side by side in the heart of the music festival Sónar by Day.

At the Sónar+D Project Area, AIM members Shuoyang Zheng and Franco Caspe joined the AI & Music exhibition area powered by S+T+ARTS to present their tools for AI-driven sound creation. In addition, AIM member Christopher Mitcheltree represented Neutone and presented its audio plugins.

Franco Caspe presented BRAVE, a timbre transfer tool that allows performers to play an AI model as an instrument, transforming timbre in real time. Shuoyang Zheng presented Latent Terrain Synthesis, a method to explore sonic landscapes dissected from the internal space of a generative AI model.

Photo by Xavi Bové

The AI Performance Playground took place between 11th and 14th June as part of Sónar+D 2025, co-organised by C4DM Senior Lecturer Anna Xambó, powered by S+T+ARTS, with support from La Salle-URL. This collaborative hacklab brought together artists, coders, musicians, DIY creators, and creative technologists to explore and deepen their use of machine learning tools, AI, and other related technologies for musical performance. AIM member Teresa Pelinski participated in the hacklab and joined a collaborative performance at SonarÀgora – open to the general public at Sónar by Day.

AIM members Christopher Mitcheltree and Shuoyang Zheng, together with Rebecca Fiebrink (University of the Arts London) and Nao Tokui (Neutone), joined a panel talk with Ben Cantil (DataMind) during the hacklab to discuss the challenges and opportunities of being an artist using AI tools.

First AES International Conference on Artificial Intelligence and Machine Learning for Audio (AIMLA 2025), Queen Mary University of London, Sept. 8-10, 2025, Call for contributions
