Best student paper and outstanding reviewer awards at ISMIR 2025

We are delighted to share that AIM PhD student Ben Hayes, together with AIM supervisors Charalampos Saitis and George Fazekas, has received the best student paper award at the ISMIR 2025 conference. The paper, “Audio Synthesizer Inversion in Symmetric Parameter Spaces With Approximately Equivariant Flow Matching”, proposes using permutation-equivariant continuous normalizing flows to handle the ill-posed problem of audio synthesizer inversion, where multiple parameter configurations can produce identical sounds due to intrinsic symmetries in synthesizer design. By explicitly modeling these symmetries, particularly permutation invariance across repeated components like oscillators and filters, the method outperforms regression-based approaches and symmetry-naive generative models on synthetic tasks as well as on a real-world synthesizer (Surge XT).

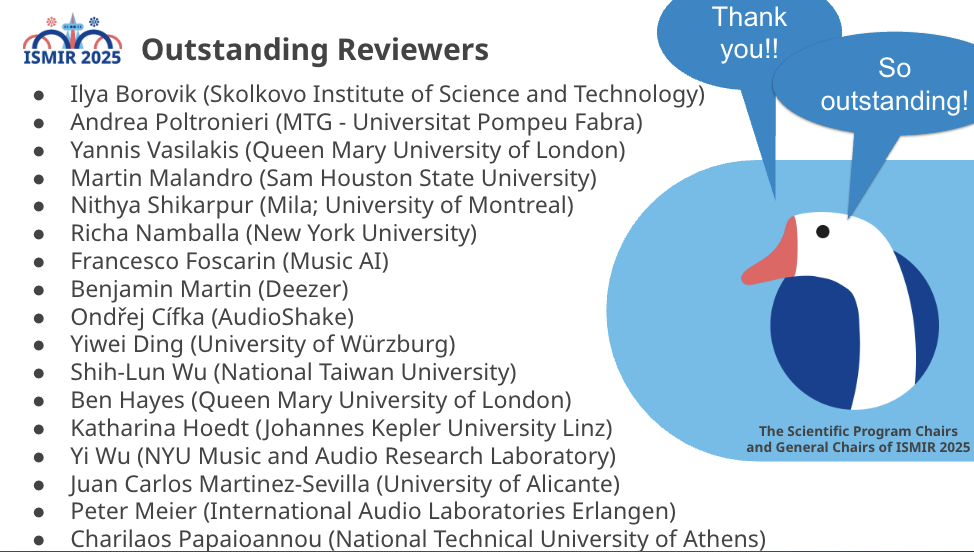

We are also happy to share that two AIM PhD students, Yannis Vasilakis and Ben Hayes, were recognised as outstanding reviewers.

This wraps up a fantastic week at ISMIR 2025 in Daejeon, South Korea, with very strong AIM participation. Pictured are current and past C4DM members, including many AIM members.

We are delighted to share that Mary Pilataki, a PhD student at AIM, has received the Best Paper Award at the Audio Engineering Society International Conference on Artificial Intelligence and Machine Learning for Audio (AES AIMLA) 2025.

Congratulations to AIM members